The Machine Stops: Will AI Lead to Freedom or Control?

How E.M. Forster's dystopia mirrors our AI-driven crossroads

Imagine a world where humanity lives entirely underground. Each person is confined to a hexagonal cell—isolated like bees in a hive. An omnipresent system known simply as “the Machine” anticipates every human need. In this world, people communicate solely through the Machine, view the world through its screens, and derive knowledge only through its databases.

This is the chilling vision E.M. Forster presented in his 1909 novella, The Machine Stops, a cautionary tale of two possible futures for humanity.

On one hand, there is Vashti, a woman who embodies passivity and dependence. She willingly surrenders to the control of the Machine, finding comfort in the decrees of its faceless Central Committee. Yet, in her conformity, she forfeits her vitality.

In contrast stands Kuno, Vashti’s son, a figure of energy and independent insight. Kuno has an irrepressible, natural hunger. He desires more than the artificial light of the Machine; he craves the warmth of the sun, the touch of grass, and the taste of genuine freedom. Despite the Machine’s incentives and constraints, Kuno yearns for a life of self-sufficiency, free of paternalism.

Today, as AI reshapes our world, we confront a similar choice: Will we proceed on a path of passive dependence, or will we carve out a future where we actively exercise our highest capacities?

Today’s Machine Comes Into View

Forster’s tale begins “in medias res” (in the middle of things)—we don’t know the backstory of why humanity retreated underground or how the Machine rose to power. In contrast, our future is unwritten. We all shape this story, but we must guard against two forces quietly steering us toward creeping dependence.

The first is the emergence of planetary-scale artifacts like recommender systems, which we estimate now mediate ~20% of our waking lives. While enabling valuable and seemingly magical product experiences, they can also cultivate passivity and imperceptibly reshape our understanding of what’s good for us.

The second is the groundswell of proposals for AI governance in response to uncertainty. Well-meaning though they may be, top-down measures that seek to optimize the world for safety or implement a prescriptive notion of justice have the potential to harm freedom and take a huge toll on independent initiative.

From Free Agents to Passive Subjects

What’s at stake if humans are transformed from free agents to passive subjects? For answers, we turn to an unexpected source: Alexis de Tocqueville’s prophetic 19th-century book, Democracy in America.

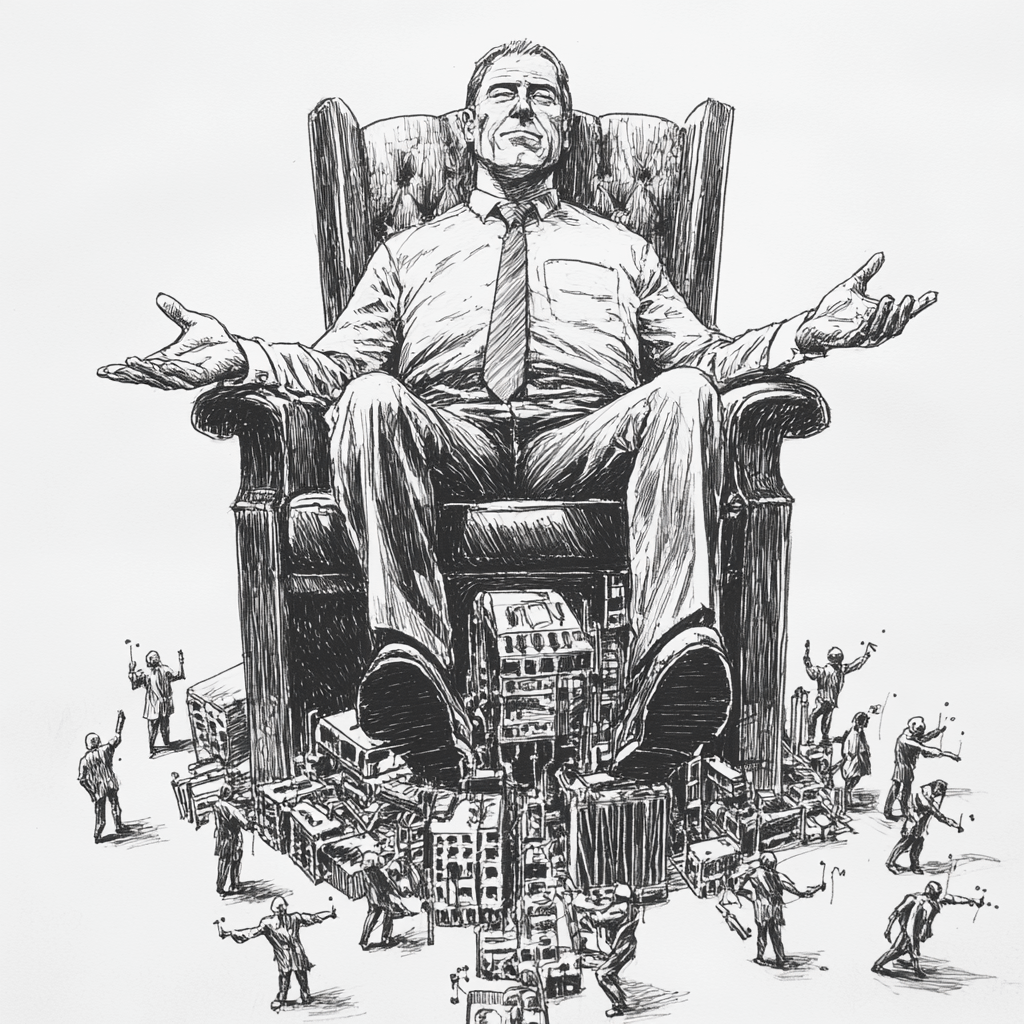

If alive today, Tocqueville might argue that while the great evil of the 20th century was the “hard despotism” of Hitler, Stalin, and Mao, the 21st century faces a more insidious threat: “soft despotism.” This manifests as a strong, centralized system that promises safety, convenience, and material equality, all while steadily chipping away at human autonomy.

As AI advances, the allure of such a system could become overwhelming. The danger? Our ability to recognize and resist this subtle threat may fade before we realize what we’ve lost.

But there’s hope. Can we cultivate a philosophy that embraces technological progress while fiercely protecting human autonomy? Can we channel the spirit of Forster’s Kuno—cherishing genuine freedom while adapting to the AI-mediated world we’re destined to inhabit?

I believe we can. It’s why I founded Cosmos: to ensure AI and its governance amplify, rather than diminish, human potential. Our mission is clear—shape a future where the Machine serves human flourishing, not the reverse.

We don’t even need to look to the past to know what it means to be “transformed from free agents to passive subjects.” The progression of technology is inextricably linked to the power structures that set the rules of price, value, and social status, collectively defining the boundaries of any individual’s freedom. For as long as society has been technological, free agency has remained elusive. Perhaps such freedom is merely an intermediate goal on a path to something else, one that might reveal itself through a latticework of ideas collectively telling us that we are all part of something big, mysterious, and impossible to explain.

Take a look at some of the trends so far. Mobility technology has reshaped spatial dynamics: it has reduced the cost of moving around while rendering land a scarce commodity and driving up its value. Similarly, as labor time is compressed, leisure time becomes a luxury, its price escalating as the cost of labor falls. The allure of artificially illuminated screens further commodifies daylight leisure. In response to these shifts, we consciously organize ourselves into hierarchical class structures, reinforcing these power and price dynamics while elevating those who embody intellectual or economic mastery over them. This pattern reflects our persistent tendency towards self-imposed servitude in the pursuit of survival.

One of the greatest threats to human autonomy, and therefore to flourishing, is our limited capacity to see through the fog of complexity and to free ourselves from the constructed features of our lives that bind us to conditions of unfreedom. Sometimes the binding conditions are not even cultural. For example, our cognitive predisposition towards visual stimuli is so pronounced that we rarely set aside enough of our expensive “free” time to develop the skill of looking into the dark interior of ourselves, a practice that many ancient traditions associate with a fundamental disruption of values, or enlightenment. But in today’s society, where are the images of wisdom we can all aspire towards?

Before a new philosophy can steer technological governance towards human flourishing, we will need to carve out new contexts (perhaps so new that they break our frames of time and space) within which it becomes possible to recover an innate sense of what it means to be human.

(we have always lived in the maze.)